Table of contents

Introduction

One of my favorite things to do with APEX is to integrate REST APIs. Whether that means building one with ORDS, or consuming one, I love working with REST APIs because they can easily extend the functionality of your app or allow you to access remote data in a somewhat "standardized" way.

My go-to strategy for integrating a REST API into an APEX app is to add it as a REST Data Source so I don't have to write any code to parse the JSON or XML response and I can just start using the API in APEX right away.

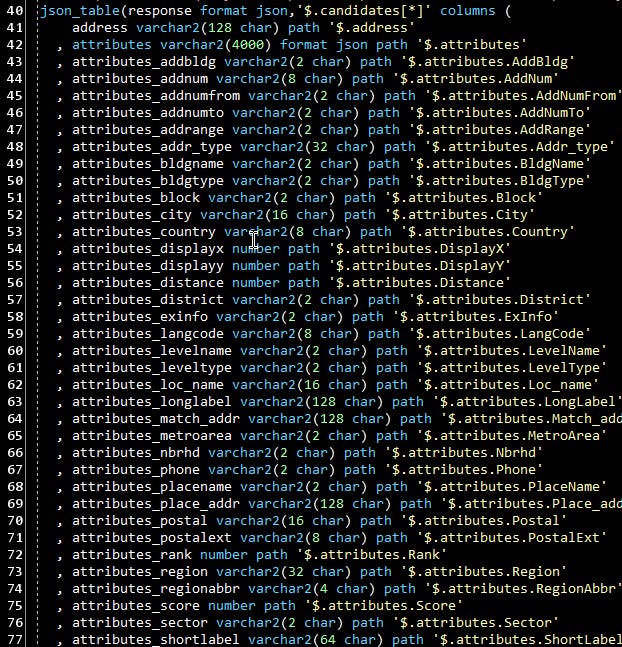

However, there's sometimes a need to go beyond what you can do declaratively in APEX and harness the full power of SQL and PL/SQL. When the time comes and I need to parse the JSON response from an API, I often found myself writing repetitive and tedious JSON_TABLE statements. If you JSON file has numerous columns, you could end up with something nasty like this:

If only there was an easier way that doesn't require writing the column definitions by hand... Once again, the APEX team has provided us with an excellent utility for the job: APEX_DATA_PARSER!

Now, I'm not going to dive into all the functionality of this package in this post, there are plenty of posts and docs about it. If you've never used it before, all you need to know is that this package lets you parse XML, JSON, CSV, and XLSX files without writing any code and returns the result as rows, so you can call this from SQL as if your file content was a regular old table!

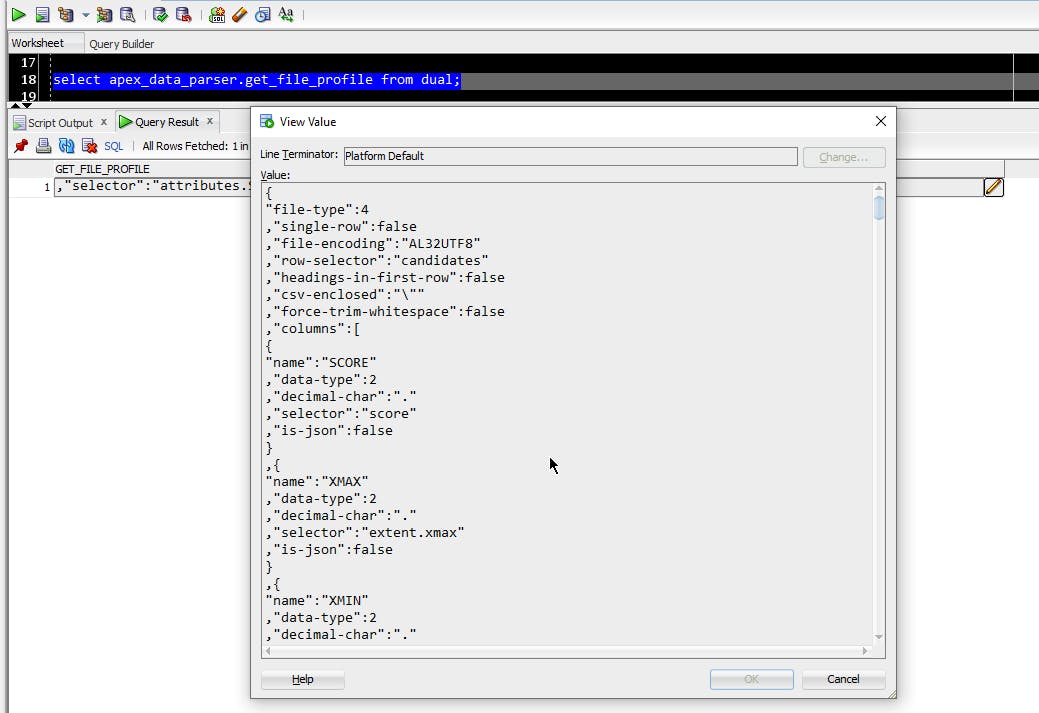

As if that wasn't enough magic, there's another function that lets you get the file profile of the parsed file. The file profile is a JSON file containing metadata about the parsed file including a list of parsed columns and their data types.

Since most of the work in writing a JSON_TABLE query involves defining the columns along with their data type and selector, this is very valuable information. With a little bit of SQL, we can use the data profile to generate our own JSON_TABLE queries.

How? Let's walk through the general steps:

- Parse your JSON document or response with APEX_DATA_PARSER.PARSE

- Get the profile from the APEX_DATA_PARSER.GET_FILE_PROFILE function

- Write a JSON_TABLE query to parse the profile and...

Wait a second Haniel, isn't this post about not writing JSON_TABLE statements manually?

Yes!

To save you time, I will provide a view I created to take care of steps 2, 3, and beyond for you.

All you need to do is download and install quick_json_table_vw.sql from my GitHub Gist: https://gist.github.com/hanielburton/7201b7ffbe77ee0a2e7dd202af31cbe8

Disclaimer, this tool is provided with no guarantee it will work or expectation of support. I built this for myself because I'm lazy and got tired of writing JSON_TABLE statements with a gazillion columns. It may or may not work perfectly for your needs. If it doesn't, please do let me know as I'm interested in improving this tool, but you're also more than welcome to modify and re-share!

The only dependency is APEX (19.1+), of course.

Step 1 is still required, the JSON file can come from anywhere so long as you pass it to the PARSE function as a BLOB.

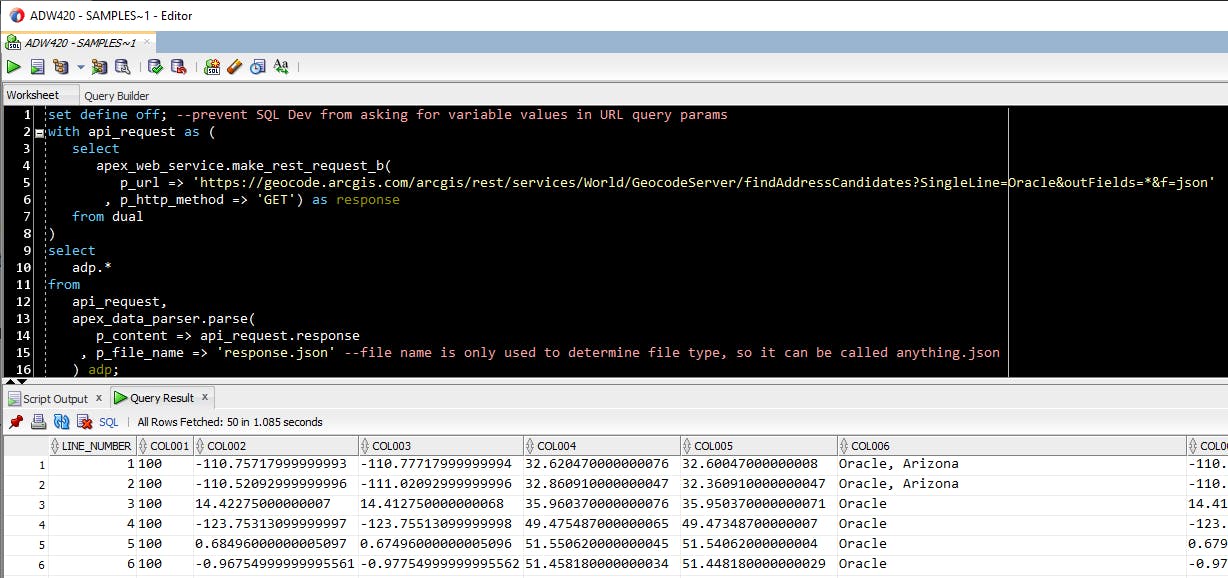

Example:

set define off; --prevent SQL Dev from asking for variable values in URL query params

with api_request as (

select

apex_web_service.make_rest_request_b(

p_url => 'https://geocode.arcgis.com/arcgis/rest/services/World/GeocodeServer/findAddressCandidates?SingleLine=Oracle&outFields=*&f=json'

, p_http_method => 'GET') as response

from dual

)

select

adp.*

from

api_request,

apex_data_parser.parse(

p_content => api_request.response

, p_file_name => 'response.json' --file name is only used to determine file type, so it can be called anything.json

) adp;

Assuming you successfully parsed the content you wanted with APEX_DATA_PARSER, all you need to do next is run:

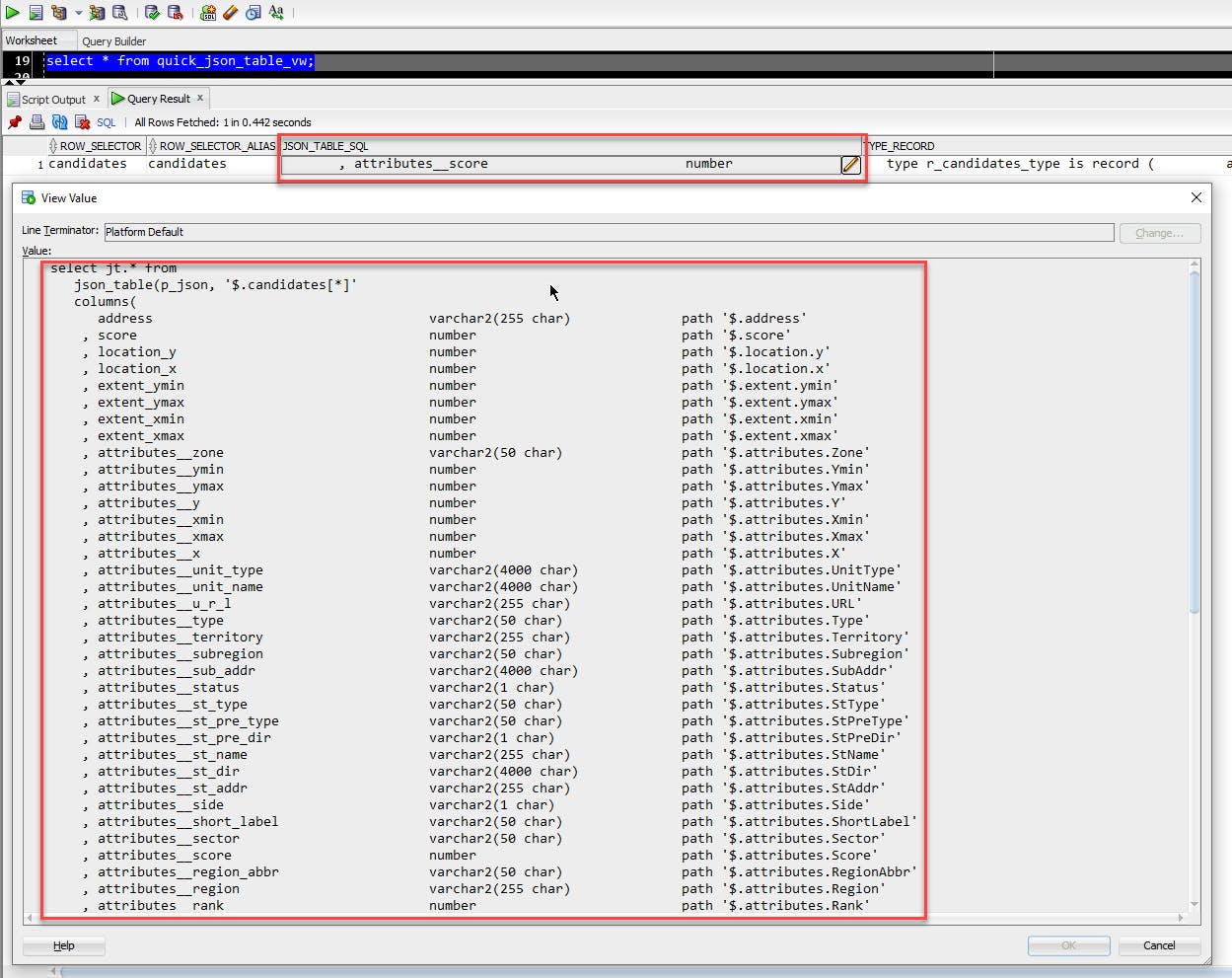

select * from quick_json_table_vw;

The view returns multiple columns, the main one is "JSON_TABLE_SQL," which contains the generated JSON_TABLE query:

Hopefully, the only modification you'll need to make is to change "p_json" to whatever you need to pass your JSON doc to the JSON_TABLE statement. If you're wrapping this code in function and pass the JSON through a parameter called p_json, then you should be good as-is.

The other columns provide the building blocks for creating a pipelined table function to parse your JSON and is meant to be added to a package, but that's a topic for another blog post, or 4.